AI is making remarkable progress

Over the last few decades, artificial intelligence has undergone a remarkable expansion in many fields of application:

- Image recognition

- Speech processing (Siri, Alexa, SoundHound)

- Natural language (translation, synthesis, summaries, Q&A, etc.)

- Games (draughts, chess, bricks, Go, poker, StarCraft, etc.)

- Decision support (banking, finance, health, etc.)

- Recommendations, personalised advertising, etc.

- Science (AlphaFold, astrophysics, etc.)

However, with the advent of generative AI, flaws are appearing as progress accelerates. Bertrand Braunschweig sums these up as the “five walls of AI”, the obstacles that AI risks running into: trust, energy, security, human-machine interaction and inhumanity. In addition, large language models (LLMs) that rely heavily on deep learning, such as ChatGPT, can be a source of errors and exhibit problems such as:

- Toxicity

- Stereotype bias and unfairness

- Lack of robustness against adversarial attacks and out-of-distribution (OOD) inputs

- Failure to respect privacy

- Ethical problems

- Hallucinations (inventions)

- Non-alignment with user decisions

Towards trusted AI

The draft European regulation on artificial intelligence, currently being negotiated, proposes that AI systems should be analysed and classified according to the risk they pose to users.

“Trust is the main issue in European regulation”, Bertrand Braunschweig points out.

The principles laid down by the European Union aim to ensure that AI systems used in the EU are safe, transparent, traceable, non-discriminatory and environmentally friendly. In addition, for an AI system to be considered responsible and trusted, three dimensions need to be taken into account, the expert points out:

- The technological dimension, encompassing the robustness and reliability of the systems.

- Interactions with individuals, including aspects such as explainability, monitoring, responsibility and transparency.

- The social dimension, addressing issues of privacy and fairness.

The European Parliament defines artificial intelligence systems as “computer systems that are designed to operate with different levels of autonomy and that can, for explicit or implicit purposes, generate results such as predictions, recommendations or decisions that influence physical or virtual environments”.

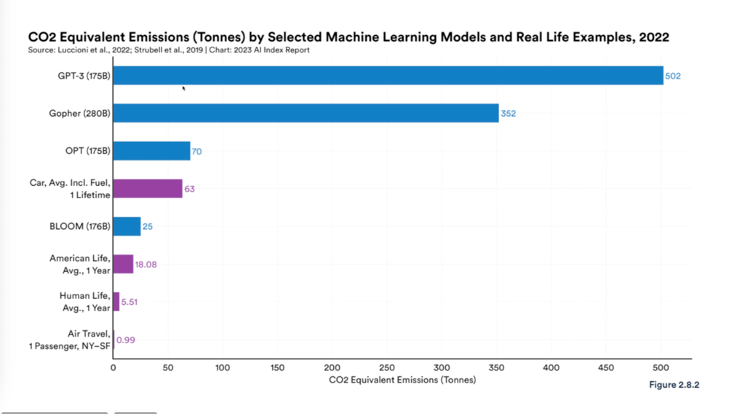

Carbon emissions generated by GPT-3

After outlining the technological advances and responsibility issues surrounding AI, Bertrand Braunschweig examined the current and future challenges facing artificial intelligence. These challenges include respect for personal data, the responsibility of AI, and the environmental and energy impact associated with the development of these tools. On the last point, artificial intelligence systems are estimated to be significant emitters of carbon dioxide. By way of example, GPT-3 is estimated to have generated around 500 tonnes of CO2 emissions in 2022. These issues underline how important it is to find appropriate solutions.

The Positive AI Community, a forum for exchange between members of the association

This event, like those to be held in the near future, is in line with the association’s commitment to creating a forum for exchanging and sharing best practice. The aim of the Positive AI Community is to become the reference community for all ethical AI issues, so that we can make collective progress on the application of responsible artificial intelligence within organisations.

Would you like to join the Positive AI initiative and benefit from upcoming Positive AI Community events? Join us!